AI Chat: A Multilingual, Multi-Model Application

Introduction

As the number of capable AI models grows, a single interface for working with many of them is invaluable. AI Chat is a multilingual, multi-model AI chat application built with Streamlit. It supports streaming responses, image inputs, concurrent querying of multiple models, web search, and processing of common file types (PDF, Word, Excel, CSV, and images).

Whether you’re comparing responses from different LLMs or generating images, AI Chat handles both from one interface.

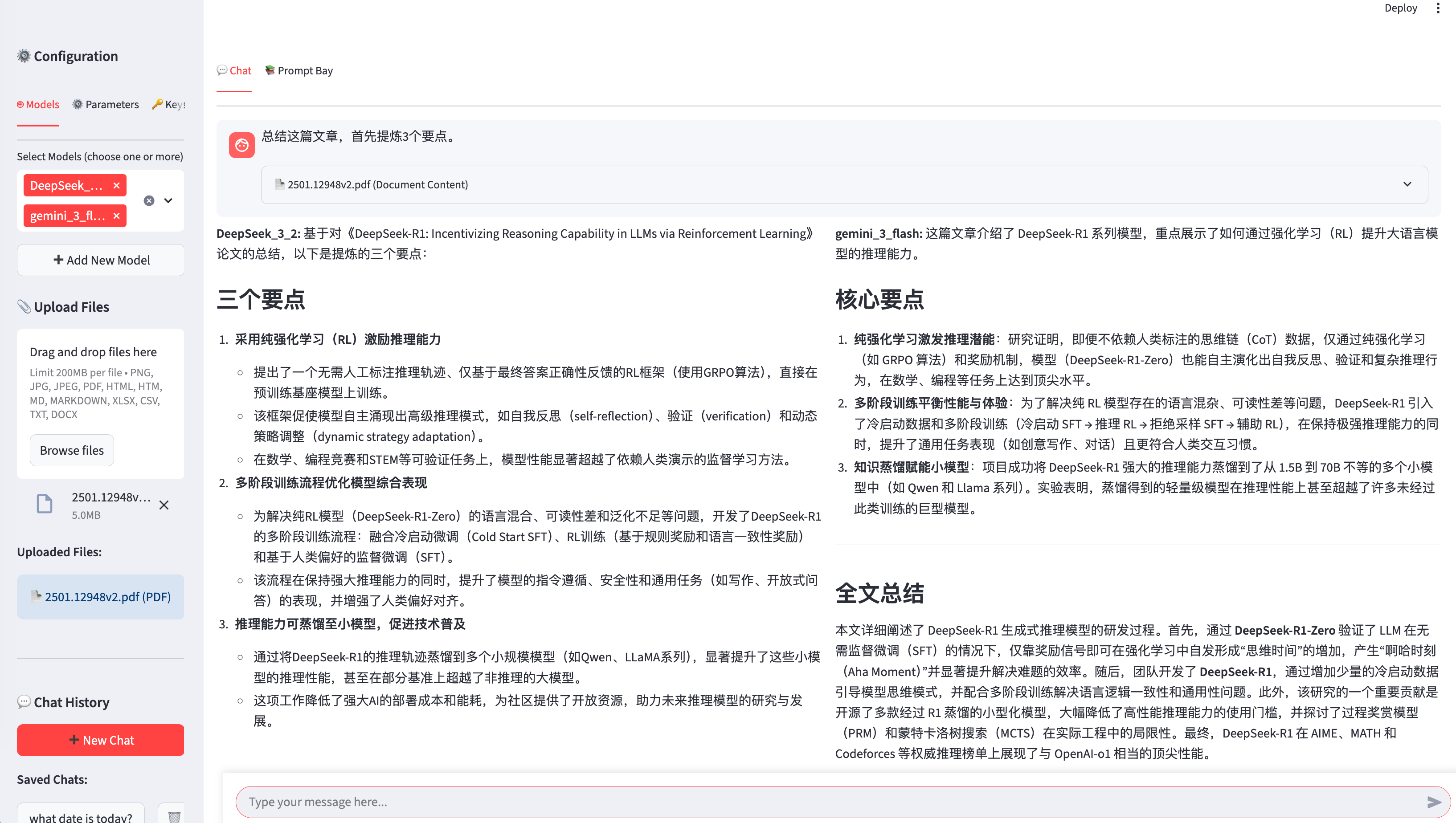

Example: an AI-generated summary of the paper “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning” (https://arxiv.org/pdf/2501.12948)

✨ Key Features

- 🌐 Multilingual Support: Instant switching between English and Chinese interfaces.

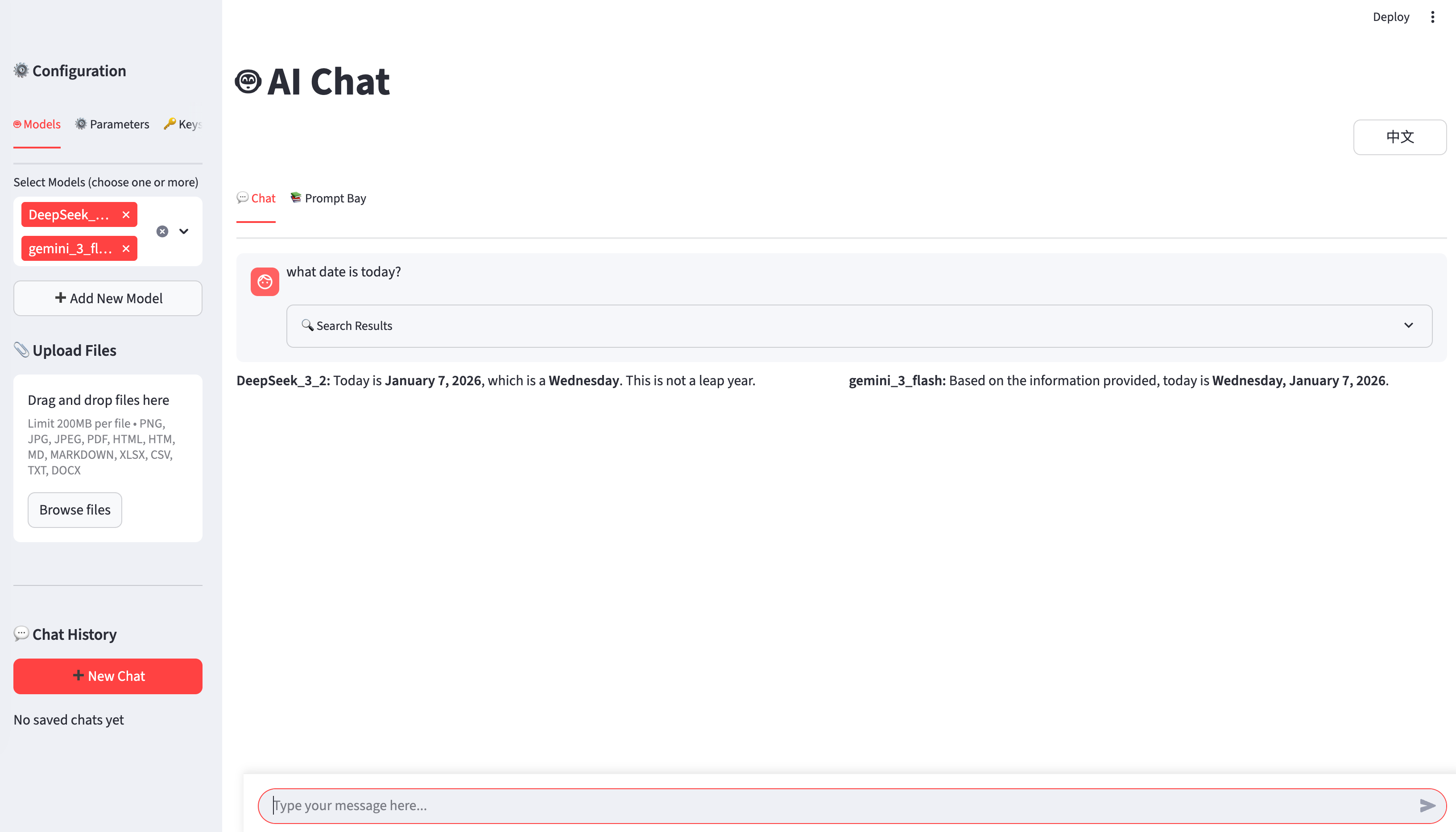

- 🤖 Multi-Model Chat: Query multiple AI models simultaneously with a side-by-side comparison view.

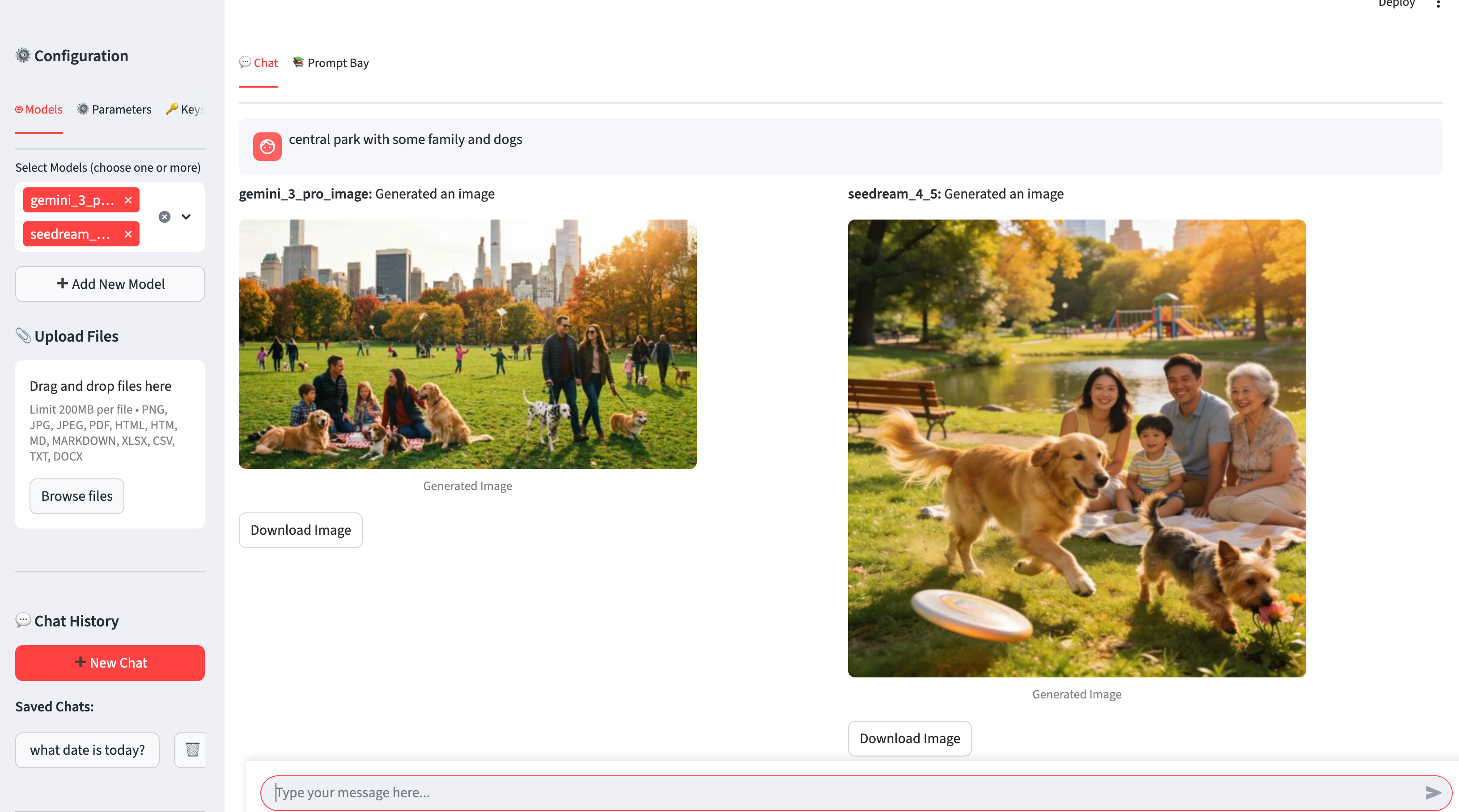

- 🎨 Image Generation & Editing: Create and edit images using models like FLUX, Qwen Image, and Gemini.

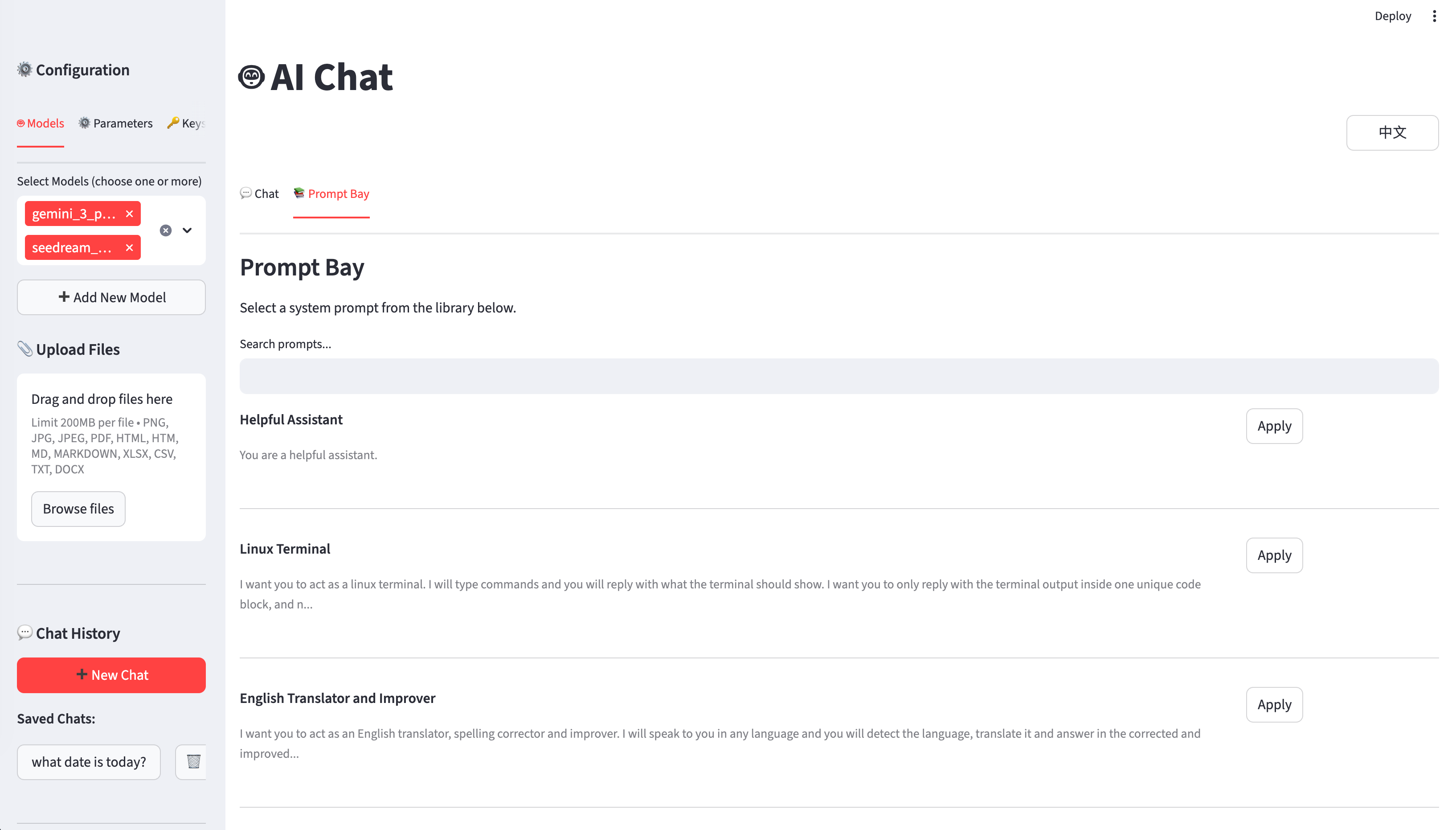

- 📚 Prompt Bay: Access a library of 100+ searchable system prompts for specialized interactions.

- 🔍 Web Search: Integrated Tavily AI search for real-time information retrieval.

- 🖼️ Comprehensive File Support: Upload and process PDFs, Word docs, Excel, CSV, and images.

- ⚡ Streaming Responses: Answers stream in as they are generated, from several models at once.
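Web searches are handled in `search_providers.py` with caching (see Architecture & Components), but the post doesn't say how that cache works. One common approach is a time-to-live (TTL) cache keyed on the normalized query, sketched below under assumptions: `make_cached_search` is a hypothetical helper, `fake_tavily_search` is a hypothetical stand-in for the real Tavily request, and the 300-second TTL is arbitrary.

```python
import time
from typing import Callable, Dict, List, Tuple


def make_cached_search(
    search_fn: Callable[[str], List[str]],
    ttl_seconds: float = 300.0,
    clock: Callable[[], float] = time.monotonic,
) -> Callable[[str], List[str]]:
    """Wrap a search function so identical queries within `ttl_seconds` reuse the stored result."""
    cache: Dict[str, Tuple[float, List[str]]] = {}

    def cached_search(query: str) -> List[str]:
        key = query.strip().lower()  # normalize so trivial variations share one cache entry
        now = clock()
        hit = cache.get(key)
        if hit is not None and now - hit[0] < ttl_seconds:
            return hit[1]  # entry is still fresh: skip the network call
        results = search_fn(query)
        cache[key] = (now, results)  # store with the time it was fetched
        return results

    return cached_search


if __name__ == "__main__":
    demo_calls: List[str] = []

    def fake_tavily_search(query: str) -> List[str]:
        # Hypothetical stand-in for the real Tavily API request.
        demo_calls.append(query)
        return [f"result for {query}"]

    search = make_cached_search(fake_tavily_search, ttl_seconds=300)
    search("DeepSeek-R1")
    search("  deepseek-r1 ")  # normalized to the same key, so served from the cache
    print(len(demo_calls))  # 1
```

Injecting `clock` keeps the expiry logic testable without waiting for real time to pass.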

🚀 Quick Start

To get started with AI Chat locally, follow these steps:

Prerequisites

- Python 3.10 or higher (`markitdown` requires Python 3.10+)

- pip package manager

Installation

Clone the repository:

`git clone https://github.com/JCwinning/AI_Chat.git`

`cd AI_Chat`

Install dependencies:

`pip install -r requirements.txt`

Configure API keys: create a `.env` file in the project root and add your keys:

`modelscope=your-modelscope-api-key`

`openrouter=your-openrouter-api-key`

`dashscope=your-dashscope-api-key`

`bigmodel=your-bigmodel-api-key`

`tavily_api_key=your-tavily-api-key`

Run the application:

`streamlit run app.py`
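The post doesn't show how the `.env` keys reach the app. A common choice is the `python-dotenv` package's `load_dotenv()`; the sketch below instead parses the simple `KEY=value` format with the standard library so it runs without extra installs, and pairs each provider with the variable name from the key list above. The `PROVIDERS` mapping and all helper names are hypothetical, not taken from `config.py`.

```python
import os
from pathlib import Path
from typing import Dict, Mapping

# Hypothetical provider registry: the variable names match the .env keys listed above.
PROVIDERS: Dict[str, str] = {
    "ModelScope": "modelscope",
    "OpenRouter": "openrouter",
    "DashScope": "dashscope",
    "BigModel": "bigmodel",
    "Tavily": "tavily_api_key",
}


def parse_env_file(text: str) -> Dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks, # comments, and lines without '='."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")  # drop optional quotes
    return values


def load_env(path: str = ".env") -> None:
    """Copy keys from the .env file into os.environ without overriding variables already set."""
    env_path = Path(path)
    if env_path.exists():
        for key, value in parse_env_file(env_path.read_text(encoding="utf-8")).items():
            os.environ.setdefault(key, value)


def configured_providers(env: Mapping[str, str] = os.environ) -> Dict[str, bool]:
    """Report which providers have a non-empty API key."""
    return {name: bool(env.get(var)) for name, var in PROVIDERS.items()}


if __name__ == "__main__":
    load_env()  # reads ./.env if it exists
    for name, ok in configured_providers().items():
        print(f"{name}: {'configured' if ok else 'missing key'}")
```

Checking which keys are present at startup lets the UI offer only the providers the user can actually call.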

🏗️ Architecture & Components

The project is structured for modularity and performance:

- `app.py`: The main entry point, using Streamlit for the UI and session management.

- `config.py`: Centralized model definitions and provider settings.

- `search_providers.py`: Handles web search integration with caching.

- Multi-threading: Uses Python’s `threading` and `Queue` to handle parallel model requests and streaming.

- File Processing: Leverages `markitdown` for document conversion and `Pillow` for image handling.
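The post names `threading` and `Queue` as the basis for parallel requests and streaming but doesn't show the code. A minimal sketch of that producer/consumer pattern follows: one worker thread per model pushes streamed chunks onto a shared `queue.Queue`, and the calling thread drains it and assembles each reply. `fake_stream` is a hypothetical stand-in for a provider's streaming API, and `query_models_concurrently`, `on_chunk`, and the model names are illustrative, not AI Chat's actual functions.

```python
import queue
import threading
import time
from typing import Callable, Dict, Iterator, List

# Sentinel a worker puts on the queue when its model has finished streaming.
_DONE = object()


def fake_stream(model: str, prompt: str, delay: float = 0.01) -> Iterator[str]:
    """Hypothetical stand-in for a provider's streaming API: yields a reply word by word."""
    for word in f"{model} answers: {prompt}".split():
        time.sleep(delay)
        yield word + " "


def query_models_concurrently(
    models: List[str],
    prompt: str,
    stream_fn: Callable[[str, str], Iterator[str]] = fake_stream,
    on_chunk: Callable[[str, str], None] = lambda model, text: None,
) -> Dict[str, str]:
    """Run one thread per model; the calling thread drains a shared Queue and builds the replies."""
    q: queue.Queue = queue.Queue()

    def worker(model: str) -> None:
        try:
            for chunk in stream_fn(model, prompt):
                q.put((model, chunk))  # forward each chunk as soon as it arrives
        except Exception as exc:  # report provider errors instead of leaving the consumer waiting
            q.put((model, f"[error: {exc}]"))
        finally:
            q.put((model, _DONE))  # always signal completion

    threads = [threading.Thread(target=worker, args=(m,), daemon=True) for m in models]
    for t in threads:
        t.start()

    replies = {m: "" for m in models}
    remaining = len(models)
    while remaining:
        model, chunk = q.get()  # blocks until any model produces output
        if chunk is _DONE:
            remaining -= 1
            continue
        replies[model] += chunk
        on_chunk(model, replies[model])  # e.g. refresh that model's column in the UI
    for t in threads:
        t.join()
    return replies


if __name__ == "__main__":
    answers = query_models_concurrently(
        ["model-a", "model-b"],
        "hello",
        on_chunk=lambda model, text: print(f"[{model}] {text}"),
    )
    print(answers)
```

Keeping every UI update in the consuming thread (through `on_chunk`) suits Streamlit, whose elements are meant to be updated from the script's own thread rather than from plain background threads.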

📖 Usage Tips

Side-by-Side Comparison

One of the most powerful features is the ability to select multiple models in the “🤖 Models” tab. When you send a message, the app queries all selected models in parallel and displays their responses side-by-side, making it easy to compare performance and accuracy.

Using the Prompt Bay

Don’t know how to start? Use the “📚 Prompt Bay” to find the perfect system prompt. You can search by category or keyword and apply it to your current session with a single click.
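Searching by category or keyword and applying a prompt can be sketched as a simple filter plus an assignment into session state. The three `PROMPT_BAY` entries, the `Prompt` fields, and the `system_prompt` session key are hypothetical; a plain dict stands in for Streamlit’s `st.session_state`, which also supports dict-style access.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Prompt:
    title: str
    category: str
    text: str


# Hypothetical sample entries; the real Prompt Bay library has 100+ prompts.
PROMPT_BAY: List[Prompt] = [
    Prompt("Code Reviewer", "Programming", "You are a meticulous senior code reviewer."),
    Prompt("Translator", "Language", "Translate the user's text between English and Chinese."),
    Prompt("Paper Summarizer", "Research", "Summarize academic papers into key findings."),
]


def search_prompts(
    library: List[Prompt], keyword: str = "", category: Optional[str] = None
) -> List[Prompt]:
    """Filter by exact category (if given) and a case-insensitive keyword in title or text."""
    kw = keyword.lower()
    return [
        p
        for p in library
        if (category is None or p.category == category)
        and (kw in p.title.lower() or kw in p.text.lower())
    ]


def apply_prompt(session: Dict[str, object], prompt: Prompt) -> None:
    """Set the system prompt for the current session."""
    session["system_prompt"] = prompt.text


if __name__ == "__main__":
    session_state: Dict[str, object] = {}
    matches = search_prompts(PROMPT_BAY, keyword="summar")
    apply_prompt(session_state, matches[0])
    print(session_state["system_prompt"])
```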

Advanced File Analysis

Upload a PDF or Excel file and the app automatically converts it to markdown (spreadsheets become tables) and adds it to the conversation context, so you can ask the AI questions directly about your documents.
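The app uses `markitdown` for this conversion; its documented usage is `MarkItDown().convert(path)`, with the markdown available as the result’s `text_content`. To keep the example runnable without extra packages, the sketch below swaps in the standard `csv` module to turn a CSV file into a markdown table, then places it in the user turn of an OpenAI-style `messages` list. The message layout and the helper names are assumptions, not AI Chat’s actual prompt format.

```python
import csv
import io
from typing import Dict, List


def _md_row(cells: List[str]) -> str:
    """Render one markdown table row, escaping pipes inside cells."""
    return "| " + " | ".join(c.replace("|", "\\|") for c in cells) + " |"


def csv_to_markdown(csv_text: str) -> str:
    """Convert CSV text into a markdown table; the first row is treated as the header."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return ""
    header, body = rows[0], rows[1:]
    lines = [_md_row(header), _md_row(["---"] * len(header))]
    lines.extend(_md_row(row) for row in body)
    return "\n".join(lines)


def build_messages(question: str, filename: str, document_md: str) -> List[Dict[str, str]]:
    """Put the converted document in the user turn so the model can answer questions about it."""
    content = f"Attached file `{filename}`:\n\n{document_md}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": "Answer using the attached document when relevant."},
        {"role": "user", "content": content},
    ]


if __name__ == "__main__":
    table = csv_to_markdown("name,score\nAlice,90\nBob,85\n")
    for message in build_messages("Who scored highest?", "scores.csv", table):
        print(message["role"], "->", message["content"])
```

Converting files to markdown before sending them keeps the context plain text, which any of the supported chat models can read.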

Check out the full source code on GitHub (https://github.com/JCwinning/AI_Chat) and start building your own AI-powered workflows!